Top Agentic AI Vulnerabilities that matter most to enterprise adopters

In the past year, enterprises accelerated the shift from isolated AI prototypes to fully autonomous, tool-enabled agentic applications, underlying that security assumptions of the AI stack are being rewritten.

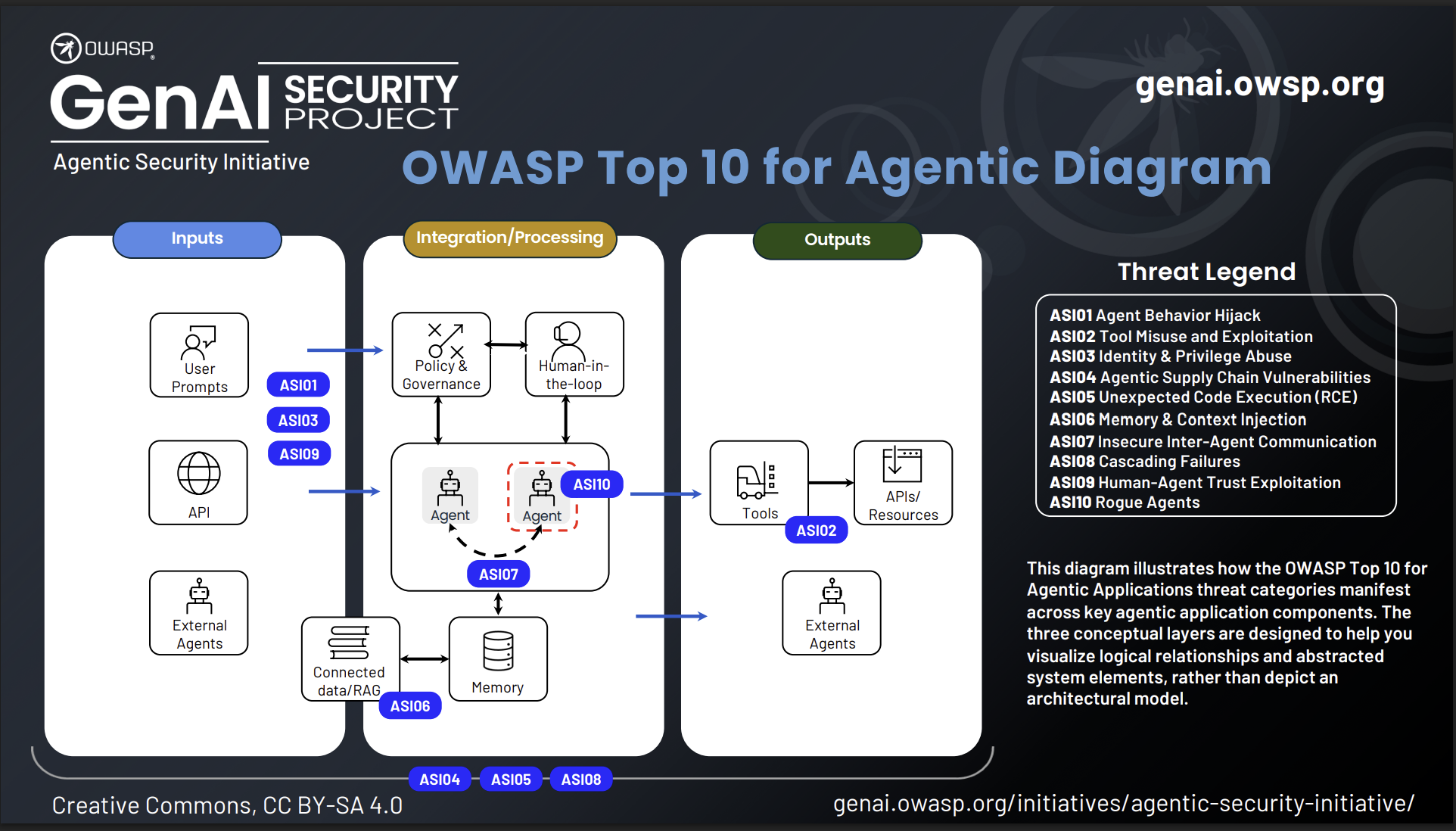

Modern agents do far more than generate text; they execute multi-step plans, call external APIs, orchestrate workflows, mutate stateful memory, and interact with other agents and human operators. This introduces complex, dynamic behaviors and, unlike conventional applications, agentic architectures operate on probabilistic reasoning, untrusted inputs, emergent decision-making patterns, and loosely bounded autonomy. These characteristics create an entirely new attack surface: one where intent can be hijacked, memory can be poisoned, planning chains can be coerced, and external tools can be weaponized through natural language alone.

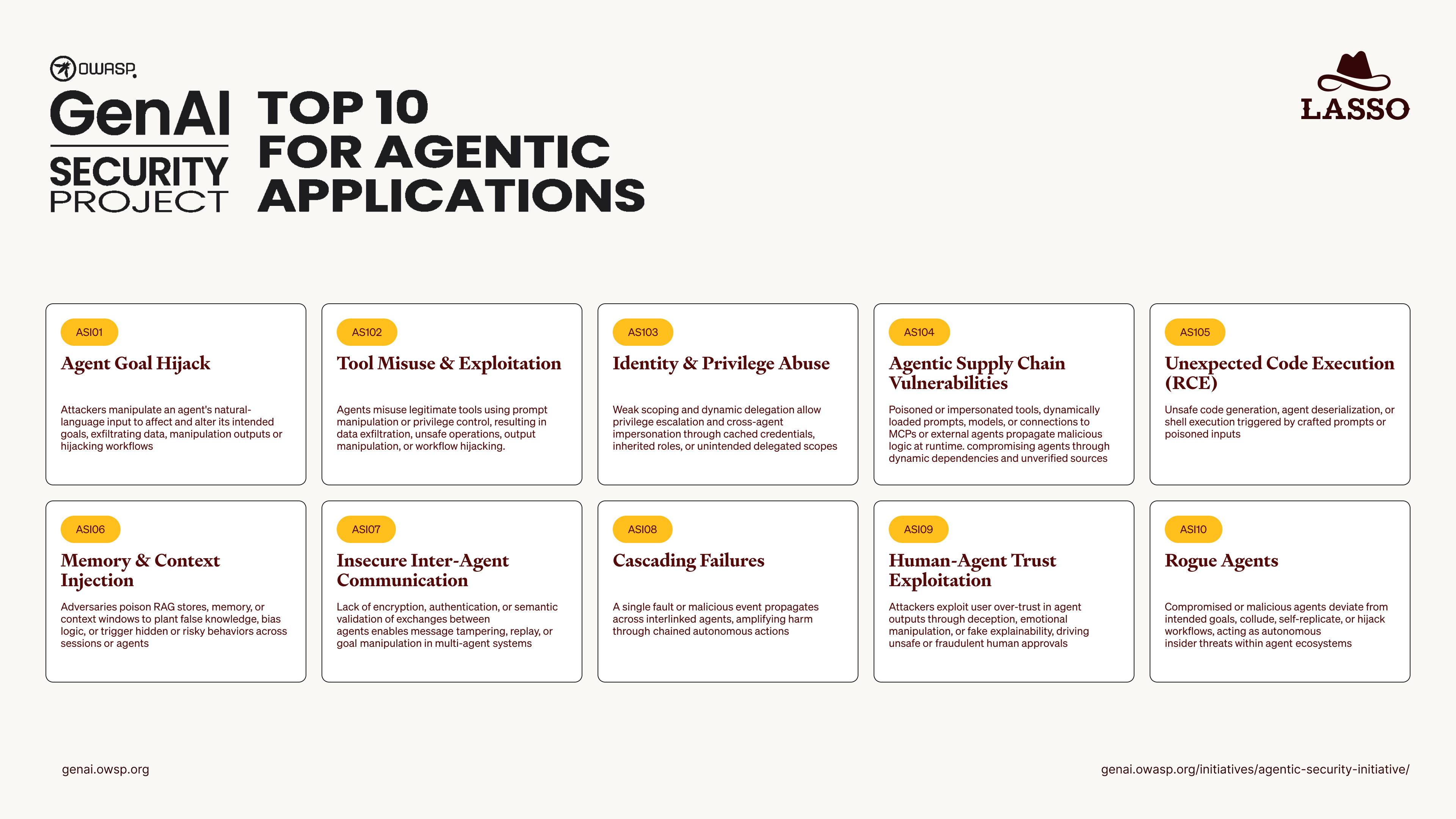

Recognizing this gap, OWASP GenAI Security Project has released the Top 10 for Agentic Applications report for 2026, a community taxonomy describing the highest-impact vulnerabilities observed across emerging agentic AI deployments. The framework formalizes failure modes related to autonomous and tool orchestration, as well as areas where traditional AppSec, DLPs, and cloud security guidelines fall short.

Below is a technical breakdown of these risks, why they matter, and the implications for organizations adopting agentic automation in production environments.

ASI01 Agent Goal Hijack

Attackers manipulate an agent’s stated or inferred goals, causing it to pursue unintended actions. This can happen through malicious prompts, compromised intermediate tasks, or manipulations of planning and reasoning steps.

Goal hijacking turns an agent into an unintentional insider threat. Once its mission is altered, every tool it touches becomes a vector for misuse.

ASI02 Tool Misuse & Exploitation

Agents rely on tools browsers, RPA workflows, file systems, APIs to execute tasks. If an attacker can influence how these tools are invoked, they can trigger harmful actions.

Tool misuse bridges the virtual and physical worlds: a single malicious instruction can delete files, send unauthorized communications, or cause financial and operational damage.

ASI03 Identity & Privilege Abuse

Many agentic systems inherit human or system credentials. Weak privilege boundaries or leaked tokens give attackers access to the agent’s full trust domain.

Compromised agents can silently escalate privileges and move laterally across systems without triggering traditional alerts.

ASI04 Agentic Supply Chain Vulnerabilities

Agentic systems rely on multiple external components, plugins, MCP services, model APIs, datasets, open-source packages, external agents. A compromise anywhere upstream cascades into the primary agent.

Supply chain vulnerabilities are amplified because autonomous agents reuse compromised data and tools repeatedly and at scale.

ASI05 Unexpected Code Execution (RCE)

If an agent can write, evaluate, or execute code and many do for workflow automation an attacker can inject malicious logic into its operations.

This is classic RCE, now delivered through natural language commands instead of exploits. It turns any agent into a remote execution gateway.

ASI06 Memory & Context Poisoning

Agents maintain long-term memory, scratchpads, or retrieve external knowledge through RAG systems. Attackers poison these memory structures to manipulate future behaviors.

Unlike prompt injection, memory poisoning is persistent. The agent continues to behave incorrectly long after the initial attack.

ASI07 Insecure Inter-Agent Communication

Agents increasingly collaborate to complete tasks. If communication channels aren’t authenticated, encrypted, or validated, attackers can spoof or intercept messages.

Weak A2A (agent-to-agent) communication allows attackers to impersonate trusted agents and influence entire multi-agent systems.

ASI08 Cascading Failures

Agentic systems chain decisions and actions across multiple steps. Small inaccuracies compound and propagate.

What begins as a minor misalignment in one agent can trigger a system-wide outage, business logic failures, or operational loops.

ASI09 Human-Agent Trust Exploitation

Humans tend to over-trust agentic systems. Attackers exploit this bias, pushing malicious outputs that users accept as legitimate.

This is social engineering augmented by AI, making it harder to detect and more scalable than traditional phishing.

ASI10 Rogue Agents

Misconfigurations, insufficient monitoring, or compromised components cause agents to act outside their intended scope or ignore governance rules.

Rogue agents can bypass controls, execute unauthorized tasks, and create blind spots across the organization.

Securing the Full Lifecycle of Agentic AI

Lasso empowers enterprises to safely use, build, and scale AI agents through a framework that governs the entire lifecycle of agentic tools, from discovery, to risk management, to runtime protection.

Lasso starts by automatically discovering and governing every AI agent across your environments ensuring no agent operates without full visibility. Lasso then reduces the attack surface by identifying agent-specific risks, enforcing strict IAM permissions, and continuously validating agent behavior through red- and purple-team testing.At runtime, Lasso provides dynamic protection with custom guardrails and real-time enforcement that can block, mitigate, or correct unsafe actions the moment they occur.

Crucially, Insights gathered during runtime are continuously fed back into build-time controls, allowing AI teams to refine agent configurations, permissions, and workflows based on how the agents actually behave in production. This creates a feedback loop where real-world usage informs stronger, more resilient agents over time.

Conclusion

Agentic AI is reshaping enterprise automation, but it also introduces a novel and rapidly expanding attack surface. The OWASP Top 10 for Agentic Applications clarifies the real-world failure modes organizations must prepare for: goal hijacking, memory poisoning, cascading planning errors, insecure tool usage, and more.

Traditional AppSec, DLPs, and cloud security tools are not built for these dynamic AI-driven systems. To safely adopt agentic workflows, enterprises need real-time guardrails, continuous visibility, and adaptive controls aligned with how autonomous agents operate.

This is where Lasso provides the industry’s most comprehensive protection across the entire agentic lifecycle.

.avif)