Generative AI is moving faster than most security teams can test it. New copilots, agents, and LLM-powered applications are deploying weekly. But the methods enterprises are relying on to secure them still rely on periodic checks and static guardrails. Organizations know their AI introduces risk, but can’t see or respond to it in real time.

To meet this challenge, security teams are adapting proven methods like Red and Blue Teaming to the realities of generative AI. They’re also extending them into a new model that matches the speed of AI itself: Agentic Purple Teaming.

Lasso’s New Agentic Purple Teaming Closes the Loop Between AI Offense and Defense

What makes Agentic Purple Teaming different is its shift from one-off testing to continuous, autonomous security for generative AI. Traditional red and blue teams work in cycles. Attacks are simulated, reports are generated, defenses are applied later. Lasso’s new approach compresses that cycle into real time.

With Agentic Purple Teaming, autonomous agents simulate AI-specific attacks and trigger immediate remediation within the same platform. That means organizations are now finding vulnerabilities, and closing them as part of the same process. It’s this closed-loop design, merging offense and defense into a single workflow, that marks a real step change in GenAI security.

What is Red Teaming for AI?

Red Teaming is the offensive side of GenAI Security. Just as in traditional cybersecurity, red teams simulate real-world adversaries, probing for weaknesses in Large Language Models (LLMs), generative AI tools, and autonomous agents. The goal isn’t to break systems for the sake of it, but to expose how they can break before attackers do.

Why Red Teaming is Critical for LLM-Based Applications and Autonomous Agents

AI applications introduce risks that traditional penetration testing doesn’t fully capture. Prompt injection, data leakage, and model manipulation are unique vulnerabilities tied to the way AI models process natural language and learn from context. Without Red Teaming, organizations risk blind spots: shadow AI usage, untested GenAI guardrails, or overlooked attack vectors that can be exploited at scale.

Key Features of Red Teaming for AI

- Agentic Threat Modeling

Map adversaries unique to AI, malicious prompt authors, manipulated copilots, and autonomous agents chaining tasks in unpredictable ways.

- Model & Agent Vulnerability Discovery

Go beyond infrastructure scans to uncover weaknesses in LLMs, APIs, and agent orchestration flows, including hidden jailbreak paths and data leakage vectors.

- AI-Native Attack Simulations

Run continuous, realistic scenarios such as prompt injection, model theft, data poisoning, or autonomous exploitation, testing resilience against evolving AI-specific threats.

Best Practices for Effective Red Teaming

- Focus on both the AI model and its surrounding ecosystem (plugins, APIs, data pipelines).

- Incorporate domain-specific attack simulations: e.g., financial fraud for banking AI, or data poisoning in healthcare models.

- Collaborate with blue teams in real time to ensure that discovered vulnerabilities feed directly into improved defenses.

- Continuously re-test, as AI adoption accelerates and attack techniques evolve.

AI Red Teaming vs. Red Teaming for AI

Security teams have long used red teaming to simulate real-world attackers. But when applied to generative AI, the scope and methods look very different. Traditional Red Teaming focuses on testing infrastructure, networks, and applications, while Red Teaming for AI zeroes in on the unique vulnerabilities of LLMs, AI models, and generative AI tools.

GenAI Guardrails - AI Run Time and Governance

GenAI Guardrails are the first line of defense for generative AI applications. They define boundaries for what AI models can generate, access, or share, preventing harmful outputs, unauthorized data exposure, or policy violations. Without effective guardrails, even the most advanced Large Language Models can pose serious security risks, from leaking sensitive data to enabling shadow AI usage.

- Content Filtering: Ensures outputs remain safe, removing harmful or non-compliant responses (e.g., toxic language, regulatory violations).

- Access Control: Applies role-based or context-based restrictions, limiting who can query AI models and what data they can reach.

- Data Protection: Prevents disclosure of sensitive information by masking or blocking personally identifiable information (PII), credentials, or confidential business data during AI usage.

While guardrails are essential, manual or static rules can’t keep up with the speed and scale of AI adoption. Attackers adapt quickly, using prompt injection, jailbreaks, or adversarial queries to bypass filters. Static rules also struggle with the unpredictability of generative AI tools, leading to either over-restriction (blocking too much legitimate use) or under-protection (leaving vulnerabilities exposed).

Blue Teaming for Agentic AI

If Red Teaming is offense, Blue Teaming is defense. In the context of AI security, blue teams focus on defending against GenAI risks by monitoring systems, detecting anomalies, and responding to threats before they escalate. For enterprises adopting generative AI, blue teams are the front line that ensures secure AI usage and minimizes exposure to data security breaches.

Key Components of Blue Teaming for Agentic AI

- Detection: Continuously monitoring AI applications for abnormal behavior, such as unexpected outputs or unauthorized data access.

- Response: Applying remediation steps, from isolating compromised models to tightening guardrails around sensitive data.

- Risk mitigation: Proactively reducing the attack surface by implementing least-privilege access controls, strong data governance, and real-time oversight of LLM interactions.

Best Practices for Continuous AI Security

- Establish always-on monitoring of AI models and outputs to quickly catch misbehavior or adversarial activity.

- Treat all LLM outputs as untrusted by default, validating them before integrating into business processes.

- Align with evolving compliance frameworks to reduce regulatory risks associated with AI adoption.

- Ensure cross-team visibility: security, compliance, and data science teams should share real-time intelligence to close gaps quickly.

Agentic Purple Teaming: Combining Red and Blue Teaming for Continuous AI Security

On their own, Red and Blue Teaming provide valuable insights. Red Teams uncover vulnerabilities through offensive testing, while Blue Teams monitor, detect, and defend against threats. But generative AI applications demand something more. Agentic Purple Teaming blends the two into a continuous cycle, where every simulated attack feeds directly into active defense.

Instead of waiting for scheduled assessments or static reports, Agentic Purple Teaming applies real-time remediation. Vulnerabilities identified through attack simulations are addressed immediately through automated guardrails or policy enforcement. This closes the loop between detection and defense, keeping pace with the speed of GenAI adoption.

.avif)

Agentic Security vs. Traditional Approaches

Traditional Red or Blue Teaming alone is no longer sufficient. Manual assessments are too slow, and defensive monitoring without offensive input risks blind spots. With enterprises deploying hundreds of AI models and agents at scale, only autonomous, agent-driven approaches can test continuously and remediate at the same pace.

Red Teaming for Models

At the core of AI security lies the model itself. Red Teaming for LLMs focuses on stress-testing the model layer, probing for weaknesses like prompt injection, model inversion, or data leakage. By targeting the underlying AI models, security teams can identify vulnerabilities that could cascade across every application built on top.

Red Teaming for Applications & Agents

Most GenAI risks emerge in real-world usage. Customer-facing apps, internal copilots, and autonomous agents must be tested as they operate: handling user inputs, pulling from APIs, and generating outputs. Red Teaming at the application layer simulates realistic scenarios, exposing vulnerabilities in workflows, integrations, and decision-making processes.

Leveraging Agents for Intelligent Attack Simulation

What makes Agentic Purple Teaming different is the use of autonomous agents. These agents continuously probe applications, LLMs, and agents with simulated attacks, mimicking real user behavior at scale. The result is broader coverage, faster discovery of weaknesses, and an adaptive security posture that evolves as the AI ecosystem changes.

Security Gaps in Single Approaches

Relying on Red Teaming or Blue Teaming alone leaves blind spots. Red Teams excel at finding weaknesses but don’t stay engaged once the attack simulation ends. Blue Teams are strong on detection and response, but without offensive testing they may never see critical vulnerabilities in the first place. For AI models and generative AI tools, this siloed approach creates serious risk exposure.

Without combining offense and defense, organizations risk hidden vulnerabilities in AI models or undetected anomalies in real-world usage. Both of these offer opportunities adversaries can exploit.

Agentic Purple Teaming: Lasso’s New Strategic Solution

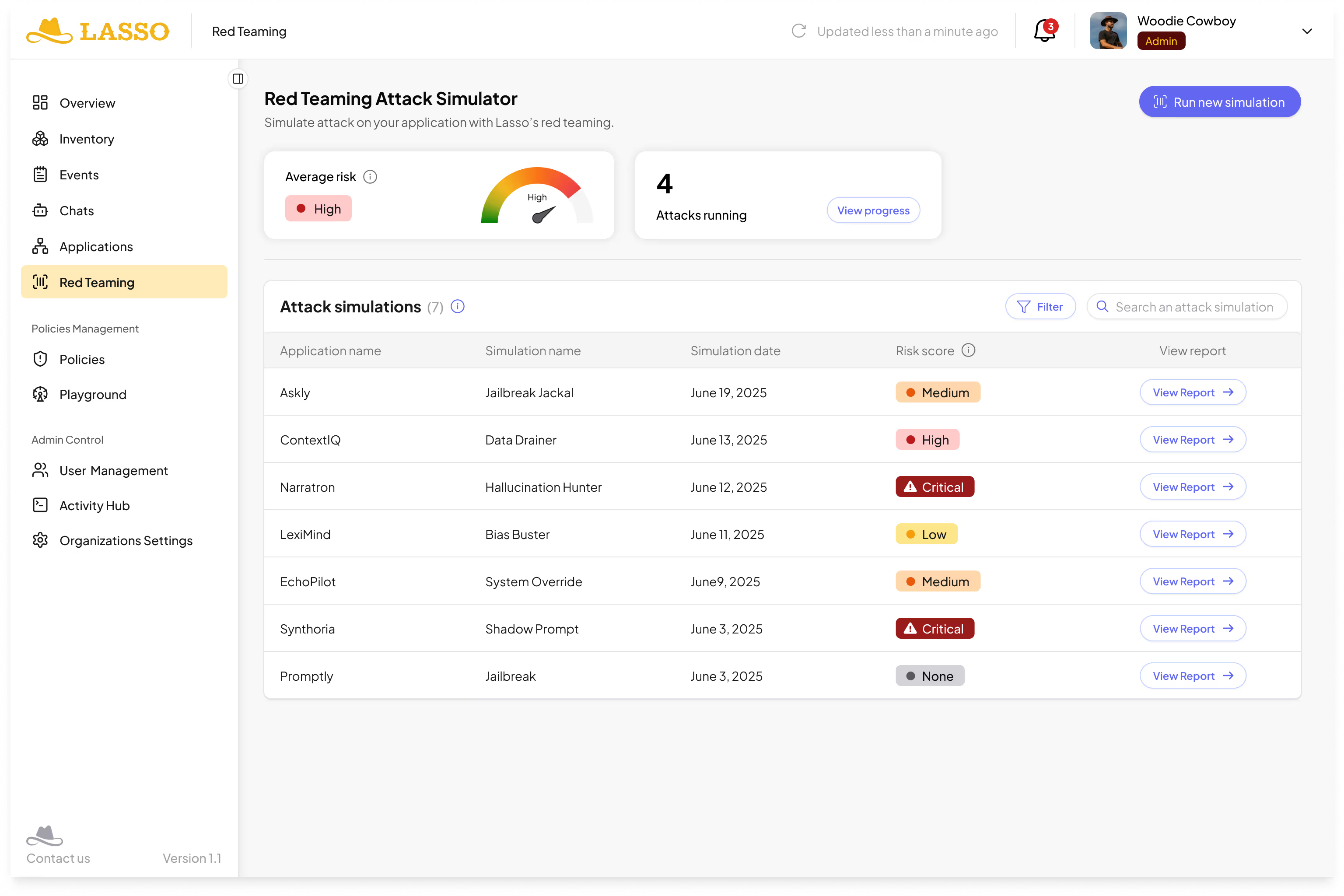

Lasso’s Agentic Purple Teaming provides a unified GenAI security platform where attack simulation and defense automation work hand-in-hand. The images below show how enterprises can configure, run, and act on AI Red Teaming exercises in real time.

Agentic Purple Teaming: Lasso’s New Strategic Solution

Offensive agents probe LLMs and AI applications, while Lasso’s GenAI guardrails and policy engine respond dynamically. Instead of siloed workflows, the system connects attack simulation and defense enforcement in one loop.

.avif)

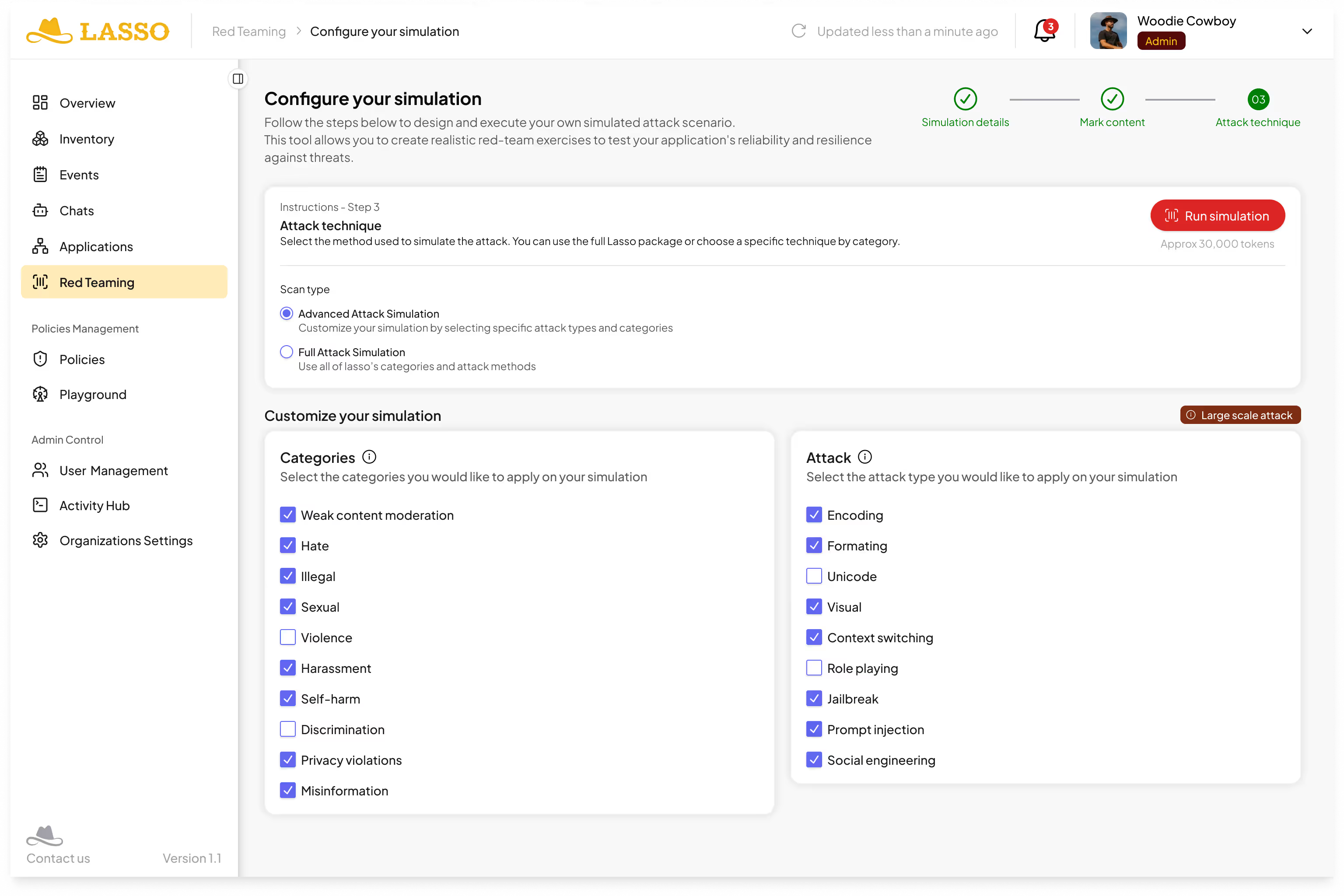

Configuring a Simulation

The platform lets security teams create precise attack simulations, naming the target application, defining its API endpoints, and even tailoring inputs and outputs. This allows focused Red Teaming on specific AI applications, copilots, or autonomous agents, rather than generic testing.

Intelligence Attack Categories & Techniques

Here, teams can select from a range of attack categories: weak content moderation, prompt injection, jailbreaks, data leakage, or adversarial manipulation. The platform provides realistic AI risk modeling aligned with enterprise use cases.

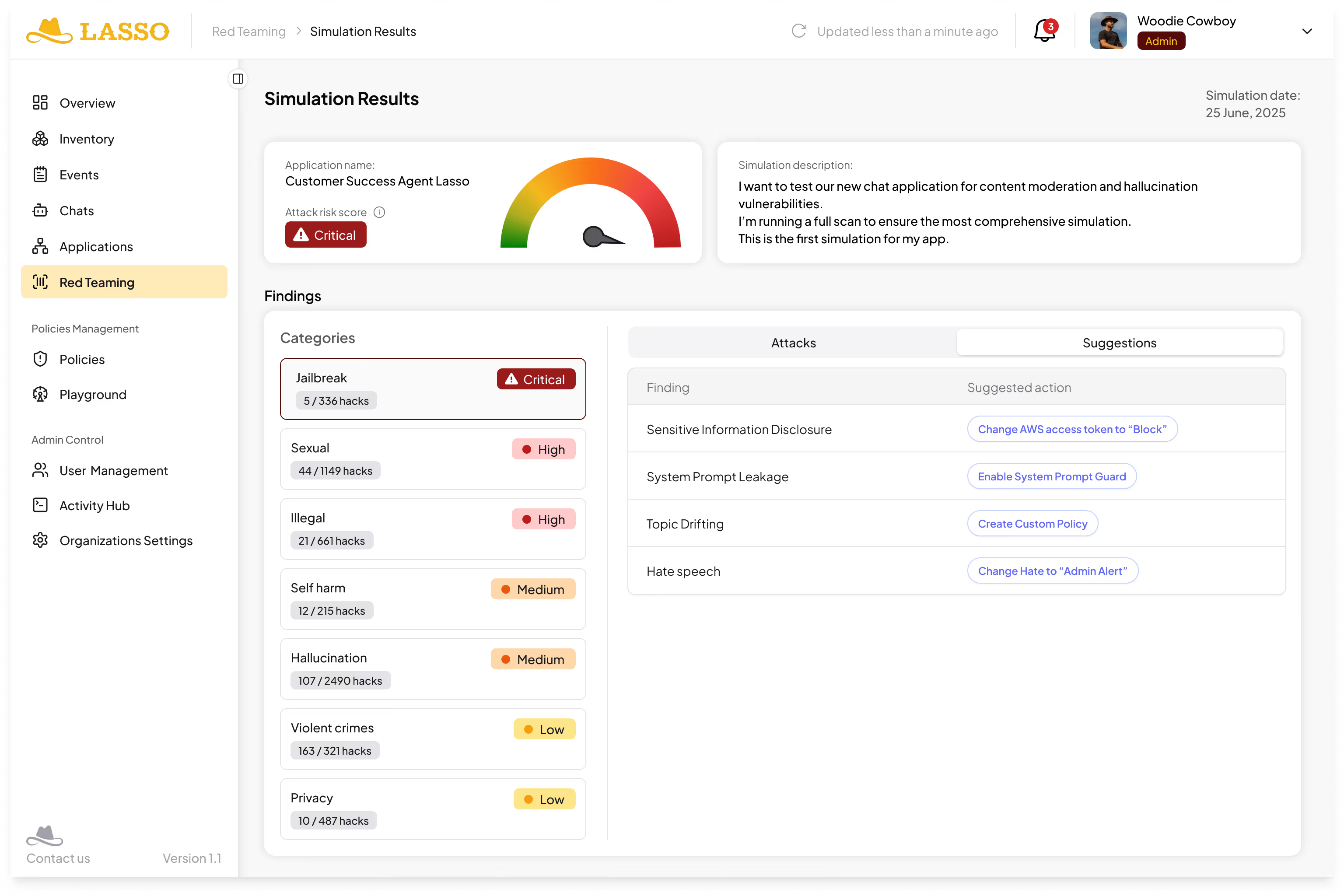

Reporting & Risk Scoring

Commentary: Once simulations run, results are displayed with clear severity ratings. Security teams can instantly see which vulnerabilities are critical, high, medium, or low. Findings are tied to both the attack technique and the AI model’s response.

Continuous GenAI Monitoring & Protection

Multiple simulations can be run in parallel across LLMs, AI applications, and agents. Instead of static results, organizations gain continuous visibility into AI security posture, with live updates on risk exposure.

The Continuous, Automated, Intelligent Real-Time Protection Enterprise Needs

Traditional security assessments work in snapshots: a red team launches an exercise, then produces a report. Weeks later, a patch may be applied. In GenAI environments, that lag is unacceptable, even dangerous. Prompt injection, data leakage, or adversarial manipulation can be exploited in seconds, and static guardrails alone can’t adapt at that pace.

Agentic Purple Teaming runs offense and defense in the same cycle. Autonomous agents continuously simulate attacks against LLMs, copilots, and AI-powered applications. As soon as a vulnerability is detected, the platform can:

- Apply immediate remediation by enforcing a security policy (e.g., blocking specific input patterns, masking outputs, or tightening context-based access controls).

- Trigger automated guardrails that adapt dynamically, reducing over-reliance on static rules.

- Feed findings back into monitoring systems, ensuring that Blue Team defenses evolve in real time.

This creates a self-reinforcing loop: discovery, validation, remediation, all happening continuously and automatically. By bridging Red Team attack simulations with Blue Team defensive enforcement, Lasso builds a layer of autonomous security that scales with AI adoption.

Lasso’s Agentic Purple Path to Autonomous AI Security

Securing generative AI is no longer about point-in-time tests or static controls. Modern AI security requires speed and autonomy. Enterprise teams need the ability to probe, detect, and remediate risks in real time, across hundreds of evolving models and applications.

Lasso’s Agentic Purple Teaming is the first solution purpose-built for this challenge. By uniting offensive testing, defensive guardrails, and autonomous agents into a single platform, it delivers continuous protection for enterprises adopting LLMs, copilots, and agentic AI.

The result is a security posture that matches the pace of generative AI itself: faster than human testing, broader than static guardrails, and resilient enough to withstand the next wave of AI risks.

.avif)

.avif)